Model Monitoring Part 2: Model Explainability for Consumers and Users of Data-Driven Models

The first part of this discussion of model monitoring focused on addressing regulatory concerns. However, there are many reasons to want model explainability beyond regulation. If companies depend on data-driven systems for mission-critical components of their business, or if those systems are critical to the company’s profitability, middle and senior managers will be far more comfortable predicting future behavior if they understand, or can explain, what information is driving the systems and contributing the most to their behavior.

As an example, let’s take a lesson learned from my experience in building models for predicting stock price movements. As you might expect, many financial institutions have long depended on accounting reports from companies to evaluate the desirability of investing in individual companies, industries, or the market as a whole. In the 1990s and early 2000s, some of these institutions started building automated systems that used artificial intelligence, machine learning, and statistical modeling to extract information from these reports. However, firms that grew to depend on the consistency and meaning of those reports would have experienced a shock around 2002, when Enron’s accounting scandal triggered regulatory reform that changed the nature of public accounting filings from corporations. Companies were prohibited from making certain kinds of inaccurate financial reports that were common at the time, but which were seen as leading to the Enron bankruptcy, and so the reports became more accurate. Systems based on information extracted from accounting reports likely would have started producing far different results, because the underlying information in those reports had changed in nature. Groups that didn’t realize their models were driven to a large degree by accounting reports might not have been able to diagnose why their systems started behaving differently.

Another example comes from the world of human language processing. Let’s consider an application that uses a variety of algorithms to process natural language input to accomplish some task in a company. Let’s say the vendor has operated exclusively in English-speaking environments, and the application supports only the English language. Large enterprise clients for such applications can rarely stay within such a limited world. Once the application proves itself to be valuable in the English-speaking world, what should the vendor do when asked to port it to another language, say Spanish, Japanese, or Chinese? Will the models work on these other languages? It would be impossible to say for sure without adequate training data, test data, and test subjects. However, model explainability would give great insight into the likelihood of success. If the models used in the application depend on language-specific features, say grammatical structure or hand-generated ontologies of the English language, then it would take significant effort to reproduce them for other languages, especially if those resources were acquired from public sources for which Spanish or Chinese counterparts do not exist. If, however, the model performance can be explained by language features that have translatable counterparts in the other languages, or that don’t depend on the source language at all, then the likelihood that the application could be ported to a new language is greatly increased. Understanding how and why the models work, and what data drives their predictiveness and performance, is key to helping data scientists and developers adapt their applications to solve new problems and work in new domains.
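One common way to find out what data a model leans on, in either of the examples above, is permutation importance: shuffle one input at a time and see how much the model's error rises. The sketch below is only an illustration under stated assumptions. The model, the feature names (`reported_earnings`, `price_momentum`, `analyst_count`), and the data are all hypothetical and synthetic, not taken from any real system.

```python
import random
from statistics import mean


def mean_abs_error(model, rows, targets):
    """Average absolute gap between the model's predictions and the targets."""
    return mean(abs(model(row) - y) for row, y in zip(rows, targets))


def permutation_importance(model, rows, targets, feature_names, n_repeats=20, seed=0):
    """Average rise in error when one feature's values are shuffled across rows.

    A large rise suggests the model leans heavily on that feature.
    """
    rng = random.Random(seed)
    baseline = mean_abs_error(model, rows, targets)
    importance = {}
    for name in feature_names:
        increases = []
        for _ in range(n_repeats):
            column = [row[name] for row in rows]
            rng.shuffle(column)
            shuffled_rows = [{**row, name: value} for row, value in zip(rows, column)]
            increases.append(mean_abs_error(model, shuffled_rows, targets) - baseline)
        importance[name] = mean(increases)
    return importance


# Hypothetical stand-in for a trained model: it leans on an accounting-derived
# feature, uses price momentum lightly, and ignores the third input entirely.
def toy_model(row):
    return 3.0 * row["reported_earnings"] + 0.5 * row["price_momentum"]


# Synthetic data, invented purely for illustration.
data_rng = random.Random(42)
FEATURES = ["reported_earnings", "price_momentum", "analyst_count"]
rows = [{name: data_rng.gauss(0.0, 1.0) for name in FEATURES} for _ in range(500)]
targets = [toy_model(row) + data_rng.gauss(0.0, 0.1) for row in rows]

scores = permutation_importance(toy_model, rows, targets, FEATURES)
for name, score in sorted(scores.items(), key=lambda item: -item[1]):
    print(f"{name:>18}: {score:.3f}")
```

In this toy setup the accounting-derived feature ranks first and the ignored input scores zero. A team that saw a ranking like this before a reporting regime changed would at least know where to look when results shifted. The same kind of ranking, applied to language-specific versus language-independent features, would hint at how hard a port to a new language might be.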

As in the discussion above, some institutions will decide simply to limit their use of data-driven systems to those that have an acceptable level of explainability. They will want to know what data is important, how it contributes to decisions, and how changes in the nature, availability, or accuracy of the data will affect the performance, value, and profitability of the systems that use those models. Others will accept a certain level of black-box behavior in their model-based systems, but will require tools that monitor the way information flows into their models, track how the models react to changes in the data extracted from the world, and try to predict the stability of model performance based on the expected variability of real-world data.
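As one illustration of what such a monitoring tool might compute, the sketch below compares the distribution of a single numeric input in live data against a baseline sample using the Population Stability Index (PSI). This is one commonly used drift measure, not the only option. The data is synthetic, and the 0.25 alert threshold is a widely quoted rule of thumb that I am assuming here; it would need tuning for any real application.

```python
import math
import random


def population_stability_index(baseline, live, n_bins=10):
    """PSI between a baseline sample and a live sample of one numeric input.

    Bin edges are baseline quantiles, so each bin holds roughly an equal share
    of the baseline. A higher PSI means the live data has drifted further.
    """
    ordered = sorted(baseline)
    # Interior cut points at the baseline's 1/n_bins, 2/n_bins, ... quantiles.
    edges = [ordered[(len(ordered) * i) // n_bins] for i in range(1, n_bins)]

    def bin_shares(values):
        counts = [0] * n_bins
        for v in values:
            # The number of edges at or below v is the index of v's bin.
            counts[sum(v >= edge for edge in edges)] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    expected = bin_shares(baseline)
    actual = bin_shares(live)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


# Synthetic samples: one drawn like the baseline, one from a shifted regime.
rng = random.Random(7)
baseline = [rng.gauss(0.0, 1.0) for _ in range(5000)]
same_regime = [rng.gauss(0.0, 1.0) for _ in range(5000)]
new_regime = [rng.gauss(0.8, 1.5) for _ in range(5000)]

ALERT_THRESHOLD = 0.25  # assumed rule of thumb; tune per application
for label, sample in [("same regime", same_regime), ("new regime", new_regime)]:
    psi = population_stability_index(baseline, sample)
    status = "ALERT" if psi > ALERT_THRESHOLD else "ok"
    print(f"{label}: PSI={psi:.3f} [{status}]")
```

Run on a schedule against each important input, a check like this gives an early warning that the world feeding the model has changed, before the change shows up as degraded performance.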

To some degree, all data-driven systems have “black-box” characteristics that make their behavior hard for humans to predict and make the use of these systems unsettling for management teams. For these audiences, it is critical to accompany valuable data-driven systems with monitoring tools that give management a window into how the systems are behaving and how changes in real-world data affect their trustworthiness and reliability in mission-critical and profit-critical business processes.

More News & Insights

Differential goes on the record with Lizzy Kolar, the co-founder and CEO of Scope Zero. Scope Zero's mission is to reduce annual utility bills and fuel expenses by $300 billion, the environmental equivalent of removing 125M cars from the road.

Differential goes on the record with Moshe Hecht, an award-winning philanthropic futurist and innovator, reshaping the world of giving through technology and data solutions. The founder and CEO of Hatch, he is a dedicated philanthropist and has been published in Forbes, Guidestar, and Nonprofit Pro.

The WorkplaceTech Spotlight host Hadeel Al-Tashi sits down with Lizzy Kolar, Co-Founder and CEO of Scope Zero to dive into how Scope Zero's Carbon Savings Account (CSA) empowers employees to make affordable home technology and transportation upgrades while aligning with corporate sustainability goals. They discuss how the CSA not only supports environmental and financial wellness for employees but also strengthens a company's commitment to sustainability. Don't miss this opportunity to learn how integrating green benefits can drive meaningful impact within your organization.

On the Record with Nate Cavanaugh, CoFounder & Co-CEO of FlowFi.

In 2021, Nate co-founded of FlowFi, a SaaS-enabled marketplace that connects startups and SMBs with finance experts. FlowFi has raised $10M from top VC firms including Blumberg Capital, Differential Ventures, Clocktower Ventures and Precursor Ventures, and generated 7-figures of annual recurring revenue in its first year.

Nate was nominated to the Forbes 30 Under 30 list for Enterprise Technology.

TECHCRUNCH: FlowFi, a startup creating a marketplace of finance experts for entrepreneurs, closed on $9 million in seed funding.

Blumberg Capital led the investment and was joined by a group of investors including Parade Ventures, Differential Ventures, Precursor Ventures, Special Ventures, 14 Peaks Capital and Cooley LLP.

NASDAQ: Nasdaq TradeTalks: 2024 Cybersecurity Budget Outlook with Almog Apirion, Cyolo.

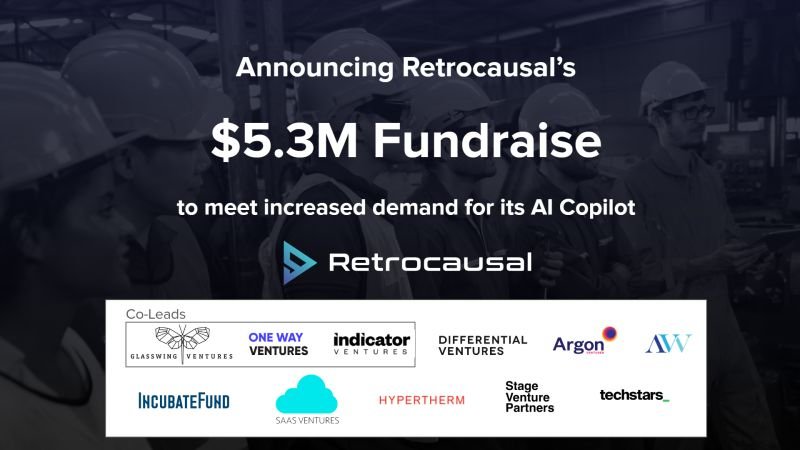

FINSMES: Retrocausal, a Seattle, WA-based platform provider for manufacturing process management, raised $5.3M in funding.

The round was led by Glasswing Ventures, One Way Ventures, and Indicator Ventures, with participation from existing investors Argon Ventures, Differential Ventures, Ascend Vietnam Ventures, Incubate Fund US, SaaS Ventures, Hypertherm Ventures, Stage Venture Partners, and Techstars.

AI and the Future of Work Podcast: Entrepreneurs wonder what it’s like to be a VC. And VCs without an operating background often don’t understand the grit required to turn an idea into a successful business. The best investors have been successful operators first.

Today’s guest is one of those. Nick Adams founded Differential Ventures in 2017 to invest in B2B, data-first seed-stage companies. Since then, Nick and the team have invested in an impressive group of companies including Private AI, Ocrolus, and Agnostiq.

On the Record with Elissa Ross, CoFounder & CEO of Metafold. Elissa Ross is a mathematician and the CEO of Toronto-based startup Metafold 3D. Metafold makes an engineering design platform for additive manufacturing, with an emphasis on supporting engineers using metamaterials, lattices and microstructures at industrial scales. Elissa holds a PhD in discrete geometry (2011), and worked as an industrial geometry consultant for the 8 years prior to cofounding Metafold. Metafold is the result of observations made in the consulting context about the challenges and opportunities of 3D printing.

Nick Adams on PM360: To get a better grasp on what eventual AI regulations could and should look like, PM360 spoke with Nick Adams, Founding Partner at Differential Ventures. In addition to starting the venture capital firm focused on AI/machine learning in 2018, Adams is also a member of the cybersecurity and national security subcommittee for the National Venture Capital Association and recently briefed members of Congress on AI policy and potential regulation.

BETAKIT: Metafold 3D, which wants to make it easier for manufacturers to design and 3D print complex parts, has secured $2.35 million CAD ($1.78 million USD) in seed funding.

Toronto-based Metafold was founded in 2020 by a group of math, geometry, and architecture experts in CEO Elissa Ross, CTO Daniel Hambleton, and COO Tom Reslinski. Born out of Hambleton’s geometry-focused consulting agency, Mesh Consultants, Metafold sells design for additive-manufacturing software to sportswear and biopharmaceutical companies.

Nick Adams on TECHBREW: For all the pixels spilled about the promises of generative AI, it’s starting to feel like we’re telling the same story over and over again. AI is serviceable at document summarization and shows promise in customer service applications. But it generates fictions (the industry prefers the euphemistic and anthropomorphizing term “hallucinates”) and is limited by the data on which it’s trained.

ATLANTA and TEL AVIV, Israel, June 29, 2023 /PRNewswire/ -- Mona, the leading intelligent monitoring platform, unveils a new monitoring solution for GPT-based applications. The free, self-service offering provides businesses with granular visibility into GPT-based products and valuable insights into costs, performance, and quality.

David Magerman on THEINFORMATION: OpenAI’s stated goal is to develop and promote a software system capable of artificial general intelligence. Toward that end, the company has released systems based on large-language models, which can respond to prompts with fluent conversation on many subjects. ChatGPT, Microsoft’s Bing chatbot and other new systems based on OpenAI’s GPT-3 and GPT-4 models are truly incredible and perform far beyond previous attempts at achieving AGI.

BUSINESSWIRE: Morgan Stanley at Work and Carver Edison, a financial technology company, announced today that Shareworks has joined Equity Edge Online® in offering Cashless Participation® to U.S.-based corporate clients. Since the initial launch of Cashless Participation® on Equity Edge Online®, stock plan participants have purchased more than one million shares1 with Cashless Participation®. Now that Shareworks has also launched the tool, a wider cohort of Morgan Stanley at Work corporate clients will have access.

FOX5 WASHINGTON DC: Nick Adams discusses the pros and cons of Artificial intelligence.

PULSE 2.0: Differential Ventures is a seed-stage venture capital fund that was founded by data scientists and entrepreneurs for data-focused entrepreneurs. To learn more about the firm, Pulse 2.0 interviewed Differential Ventures’ managing partner and co-founder Nick Adams.

IoTForAll: Golioth, a leading developer platform for the Industrial Internet of Things (IIoT), announced open access to a library of new reference designs for embedded engineers to accelerate their time to market, the launch of a Select Partner Program for energy and construction developers, and the completion of a $4.6M round of seed funding led by Blackhorn Ventures and Differential Ventures with participation from existing investors, Zetta Venture Partners, MongoDB Ventures and Lorimer Ventures.

VENTURE BEAT: Data privacy provider Private AI, announced the launch of PrivateGPT, a “privacy layer” for large language models (LLMs) such as OpenAI’s ChatGPT. The new tool is designed to automatically redact sensitive information and personally identifiable information (PII) from user prompts.